Rabbit Holes of Live Issues

written by Josh Ketchum

November 2017

Slow Performance, Memory Issues, Robots, and Test Cases

Some time ago, I experienced the excitement of live website performance issues: performance randomly decreasing, general slowness, and memory issues. These happened more and more often once the site had new users, to the point that the website froze completely, became unresponsive, and just kept spinning. Gradually, an issue that had never happened before started happening once a month, then a few times a week, then a few times a day.


During these "unstable times," the entire website would eventually become inaccessible to everyone. The only way to bring it back online was to run iisreset a few times or restart the server completely to get the website to wake up again.

When I started looking into this issue, I had a few theories: a problem with the server setup, memory usage, or many people using the website at once. It was the company's busiest season, after all, and they had many new customers. The website tended to go down at times when there could have been a lot of activity, such as Friday or Monday afternoons.

So, I started researching. #beginthesearch

Rabbit Hole #1: Too Much Activity / Memory Issues?

To try to prove this first theory and find a solution, I had to gather some resources.

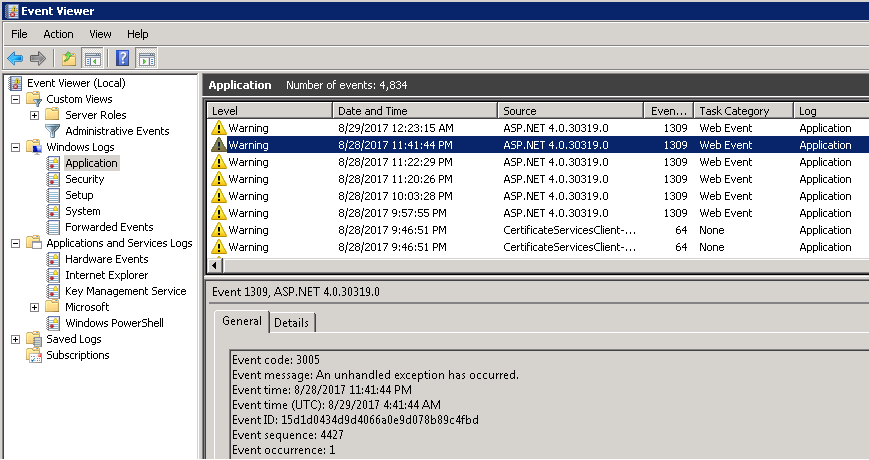

First, I opened the Event Viewer to look at the Event Logs of the application. Surely something would give me some leads there.

And I did find some leads...

There were numerous "Out of Memory" logs for the application at the times the website went down. In fact, for the majority of the instances I looked into, there were "Out of Memory" errors in the event history that lined up with the website's downtimes.
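Lining up Event Log entries with outage windows by eye works, but the same check can be done mechanically. Below is a minimal TypeScript sketch, assuming the "Out of Memory" event timestamps and the reported downtime windows have already been copied into arrays. The `Outage` type, the `eventsDuringOutages` function, the 10-minute slack, and the timestamps are all made up for illustration.

```typescript
// Hypothetical shape for a reported downtime window.
interface Outage {
  start: Date;
  end: Date;
}

// Keep only the event timestamps that fall inside an outage window,
// widened by `slackMinutes` on both sides to allow for clock drift and
// for errors logged shortly before users noticed the site was down.
function eventsDuringOutages(events: Date[], outages: Outage[], slackMinutes: number = 10): Date[] {
  const slackMs = slackMinutes * 60 * 1000;
  return events.filter((event) =>
    outages.some(
      (o) =>
        event.getTime() >= o.start.getTime() - slackMs &&
        event.getTime() <= o.end.getTime() + slackMs,
    ),
  );
}

// Usage with made-up timestamps.
const outages: Outage[] = [
  { start: new Date("2017-11-03T14:00:00Z"), end: new Date("2017-11-03T14:30:00Z") },
];
const oomEvents: Date[] = [
  new Date("2017-11-03T13:55:00Z"), // 5 minutes early, inside the slack
  new Date("2017-11-03T14:10:00Z"),
  new Date("2017-11-03T16:00:00Z"),
];
console.log(eventsDuringOutages(oomEvents, outages).length); // 2
```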

With these time frames in hand, I looked at the website's Application Logs to see what kind of activity was happening during those times. Nothing seemed very "out of the ordinary," though... The application did use real-time price calls to get prices for products, and those were being logged around those times. But the ENTIRE log was filled with real-time price call entries, and during the downtime windows the log volume didn't spike any higher than at other times of the day.

Memory was in fact an issue during these times, but nothing led me to believe it was necessarily caused by "too much activity" from product and pricing calls on the website. So I kept looking.

Rabbit Hole #2: Robots Hitting Website?

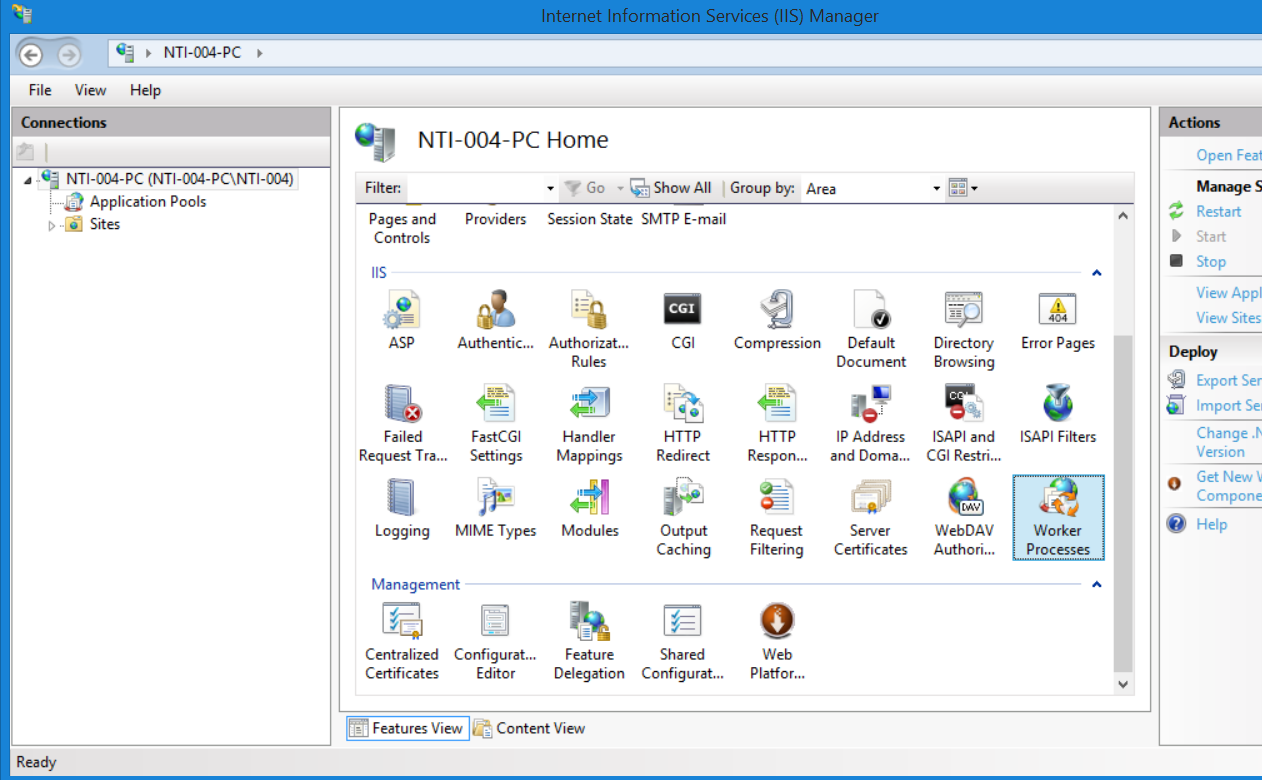

I decided to look at another place that is a common "look-here-if-there-is-an-issue" location: the Worker Processes.

The Worker Processes can be found in IIS Manager, as seen below.

After you open it, you can select the worker process for the Application Pool you want to inspect, then choose "View Current Requests" to see the calls happening on the website at that moment.

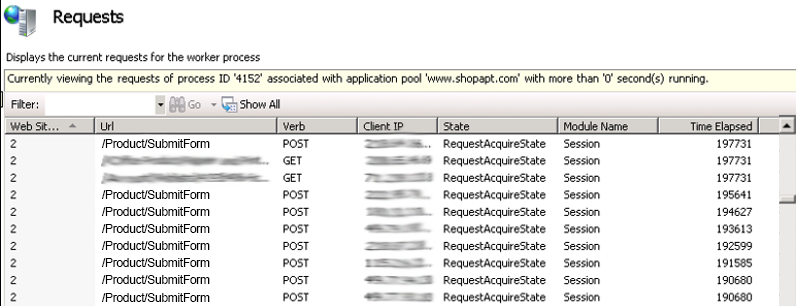

The first time I checked this, nothing seemed out of the ordinary. However, after some time, I checked again and saw hundreds of "Submit a Form" requests, each of which submitted a generated email... A robot had taken over a form and was using it to send spam emails. #dangerous

This was a big issue. Microsoft had actually flagged their no-reply email address just the week before... However, this was a new issue that had only started within the past week...

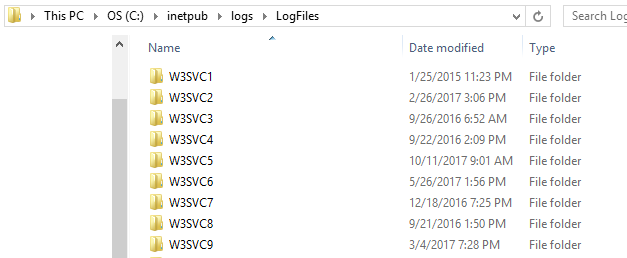

I then checked a location I hadn't looked at yet, C:\inetpub\logs\LogFiles, to see the exact requests that were coming in to the website.

Searching these log files with Notepad++, I found the form submission being called 1k+ times one day, 10k+ another day, and 5k+ on another. None of these calls lined up with the original downtime windows, but they were still an issue that needed to be fixed.
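The same search can be scripted to produce a count per day instead of a list of hits. Below is a minimal TypeScript sketch, assuming the logs use IIS's W3C extended format (each file declares its columns in a "#Fields:" directive) with the default `date` and `cs-uri-stem` columns enabled. The `/contact/submit` path and the log lines are hypothetical stand-ins for the abused form.

```typescript
// Count requests per day for one URL path in the text of an IIS W3C log file.
// Column positions are read from the "#Fields:" directive rather than hard-coded,
// because they depend on which fields are enabled in the IIS logging settings.
function countHitsPerDay(logText: string, uriStem: string): Map<string, number> {
  const counts = new Map<string, number>();
  const target = uriStem.toLowerCase();
  let dateIdx = -1;
  let uriIdx = -1;

  for (const rawLine of logText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "") continue;
    if (line.startsWith("#Fields:")) {
      const fields = line.slice("#Fields:".length).trim().split(/\s+/);
      dateIdx = fields.indexOf("date");
      uriIdx = fields.indexOf("cs-uri-stem");
      continue;
    }
    // Skip other directives (#Software, #Date, ...) and lines seen before a usable #Fields.
    if (line.startsWith("#") || dateIdx < 0 || uriIdx < 0) continue;

    // IIS writes spaces inside values as "+", so a single space separates columns.
    const values = line.split(" ");
    const uri = values[uriIdx];
    const day = values[dateIdx];
    if (uri !== undefined && day !== undefined && uri.toLowerCase() === target) {
      counts.set(day, (counts.get(day) ?? 0) + 1);
    }
  }
  return counts;
}

// Usage with a tiny made-up log excerpt.
const sampleLog = [
  "#Fields: date time s-ip cs-method cs-uri-stem cs-uri-query s-port",
  "2017-11-01 14:02:11 10.0.0.5 POST /contact/submit - 443",
  "2017-11-01 14:02:12 10.0.0.5 POST /Contact/Submit - 443",
  "2017-11-01 14:03:40 10.0.0.5 GET /products - 443",
  "2017-11-02 09:15:00 10.0.0.5 POST /contact/submit - 443",
].join("\n");

countHitsPerDay(sampleLog, "/contact/submit").forEach((hits, day) => console.log(day, hits));
// 2017-11-01 2
// 2017-11-02 1
```

In Node, each real log file's contents could be read with `fs.readFileSync(path, "utf8")` and passed in the same way.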

This particular issue was fixed by removing the form (although adding a ReCaptcha is suggested for the future).

However, the original issue remained: the website was still going down at random.

Rabbit Hole #3: Unsupported Version of Unused Product?

I took my research elsewhere and began looking at memory dumps (after first creating them).

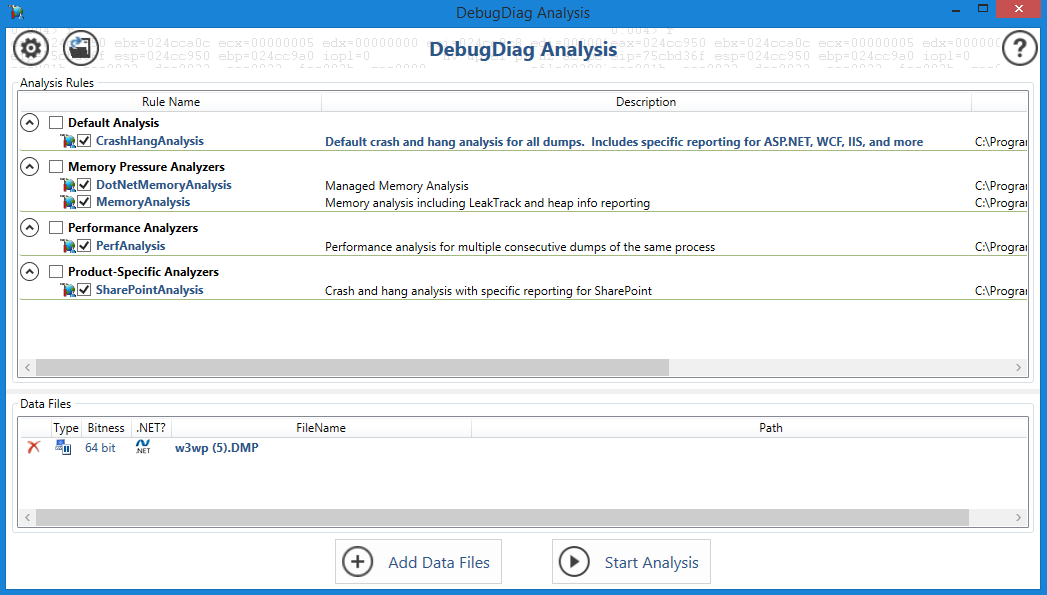

Using Microsoft's Debug Diagnostic Tool v2, I opened one of the memory dumps I created.

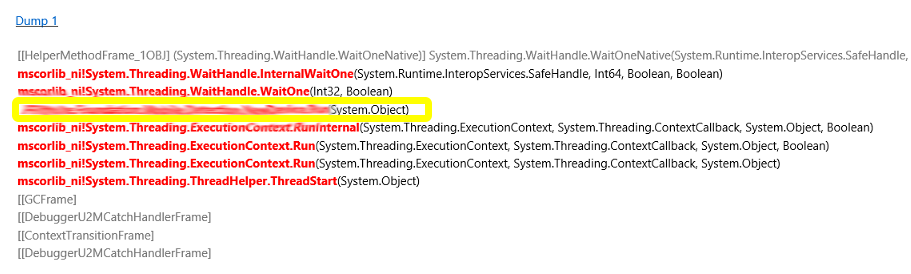

In one particular memory dump, I stumbled upon a Wait Call originating from multiple calls into a DLL. This DLL was... actually an unused DLL whose features were no longer supported by that version of the application (which had been upgraded a year or two earlier by a different company).

(Note: I blurred out the module's details because I didn't research whether the issue was with the module itself or with it running on an incompatible version.)

Although, once again, this was not the cause of the original issue I was looking for, I removed this unnecessary module to help with performance in general. There really is no need to have pipelines linked to DLLs whose features do not even exist in that version of the software.

So, I continued down my path of looking into the memory dumps. #downtherabbithole

Rabbit Hole #4: Single User doing Multiple Things?

One day, I managed to create a memory dump when the Live website was performing horribly and spinning.

And I noticed something strange....

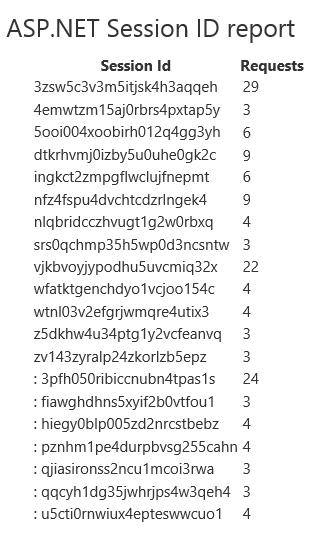

I opened it in DebugDiag and saw a few cases where a single Session ID had a lot of calls, about 20 to 30, sitting in the memory dump, while the other Session IDs had nowhere near that many, maybe between 3 and 8.

Looking into this large number of requests, I noticed that the majority were the same calls repeated again and again.

I reset the server. After a few hours: the issue, again. And again, about 20 or 30 calls from the same Session ID in memory.
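Spotting one session with 20 to 30 requests among sessions with 3 to 8 is a simple group-and-count. Below is a minimal TypeScript sketch, assuming the in-flight requests have already been copied out of the DebugDiag report by hand into (session ID, URL) records. The `InFlightRequest` shape, the threshold, and the sample data are hypothetical; DebugDiag does not export this structure directly.

```typescript
// Hypothetical record for one request found in the dump; field names are illustrative.
interface InFlightRequest {
  sessionId: string;
  url: string;
}

// Count requests per session and return the sessions at or above `threshold`,
// busiest first, so an outlier session stands out immediately.
function busySessions(requests: InFlightRequest[], threshold: number): Array<[string, number]> {
  const perSession = new Map<string, number>();
  for (const req of requests) {
    perSession.set(req.sessionId, (perSession.get(req.sessionId) ?? 0) + 1);
  }
  const result: Array<[string, number]> = [];
  perSession.forEach((count, sessionId) => {
    if (count >= threshold) result.push([sessionId, count]);
  });
  return result.sort((a, b) => b[1] - a[1]);
}

// Usage with made-up data: one session with 25 requests, two with 5 and 3.
const dumpRequests: InFlightRequest[] = [];
for (let i = 0; i < 25; i++) dumpRequests.push({ sessionId: "session-A", url: "/cart/add" });
for (let i = 0; i < 5; i++) dumpRequests.push({ sessionId: "session-B", url: "/products" });
for (let i = 0; i < 3; i++) dumpRequests.push({ sessionId: "session-C", url: "/products" });

console.log(JSON.stringify(busySessions(dumpRequests, 15))); // [["session-A",25]]
```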

Was it possible that a user doing multiple things on the website at once... was bringing the website to a halt? Was there an issue in the code? Or was a single action causing numerous requests to the same pages and then hanging the website? #unansweredquestions

I looked at the calls those Session IDs were performing and noticed numerous "Add to Cart" events after a single "Quick Order" event. But I was unsure whether this was due to a code issue (the code creating multiple calls) or to the user having many tabs open in the same browser.

After some email exchanges and looking at the code, I realized it wasn't the code itself causing multiple calls...

Rabbit Hole #5: Single Scenario

What was the issue then?

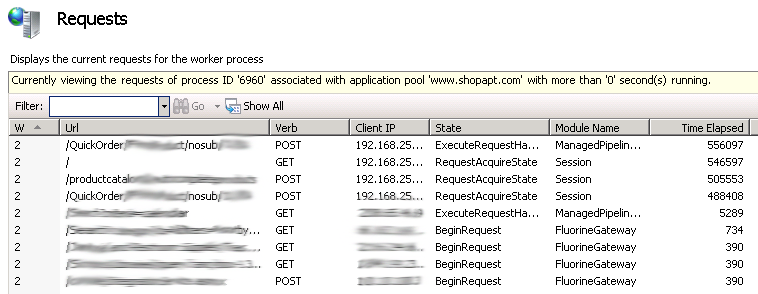

Building on what I found in #4, I went back to the Session ID that had so many calls and looked at its first call, which was also the longest-running call in memory.

And there was the issue. It was a "Quick Order" action, where there was no data being passed.

The next time the website was performing badly, I opened the Worker Processes (as in #2) and saw the same results. Sure enough, a single Quick Order action with no data being passed was holding up numerous requests for that user.

After finding this, I looked at the code and at the places where this functionality was used. This had to be a code issue, combined with a certain way the user interacted with the application, that would break the website.

Then I made a discovery. In a certain scenario, you could do a few actions, skip a step without selecting something, and then press "Add to Cart"... And there it was. My local site hung and became unresponsive. The call never completed, and the only thing that could bring my local site back was an IIS reset.

I noticed that when my website hung, I could click on anything and those calls would never come back. So the "multiple calls" seen in #4 came from the user trying to get the website to work by clicking buttons over and over.

The issue was that the JavaScript didn't check for a value before posting the data to the URL. When the request was posted with no data, the application would go into an endless loop, keep searching, and push memory usage to an outrageous amount. #questionssolved
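The post doesn't include the actual script, so the TypeScript below is only a minimal sketch of the missing check, assuming a Quick Order form that produces a list of SKU and quantity lines. The `QuickOrderLine` shape, the `/quickorder/addtocart` URL, and the `post` callback (standing in for whatever AJAX call the page really makes) are all hypothetical.

```typescript
// Hypothetical shape of one Quick Order line.
interface QuickOrderLine {
  sku: string;
  quantity: number;
}

// Keep only lines a user actually filled in: a non-blank SKU and a positive whole quantity.
function usableLines(lines: QuickOrderLine[]): QuickOrderLine[] {
  return lines
    .map((line) => ({ sku: line.sku.trim(), quantity: line.quantity }))
    .filter((line) => line.sku !== "" && Number.isInteger(line.quantity) && line.quantity > 0);
}

// Check for a value *before* posting. Returns false (and sends nothing)
// when the user skipped a step and there is nothing to add to the cart.
function addToCart(lines: QuickOrderLine[], post: (url: string, body: string) => void): boolean {
  const payload = usableLines(lines);
  if (payload.length === 0) {
    return false; // the page can show a "please select a product" message here
  }
  post("/quickorder/addtocart", JSON.stringify(payload));
  return true;
}
```

A client-side check like this only protects the normal page flow; anything can still post directly to the URL, so the server-side code should reject empty input as well.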

Some Food for Thought

After all of this research and all of these rabbit holes, I had a few takeaways: things we should always consider when working on projects, whether during our own development or while researching a project developed by another company.

- Don't forget to follow recommended standards.

The robots could have been blocked by a ReCaptcha, which is a commonly recommended feature.

- Proper cleanup after upgrades.

When upgrades happen, don't forget to do an inventory of the currently installed modules. Upgrade what is needed, remove the rest.

- Proper test cases.

Test cases should try to break the website before a user in Live breaks it.
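As an example of a test case that tries to break things, here is a minimal TypeScript sketch of a server-side guard for the same kind of bug, followed by the inputs a user produces by skipping a step. The real search code isn't shown in this post, so `quickOrderSearch`, its `SearchResult` type, and the `MAX_RESULTS` cap are hypothetical. The shape is what matters: reject empty input before doing any work, and put a bound on the work that does happen.

```typescript
// Hypothetical result type for a Quick Order product search.
type SearchResult = { ok: true; matches: string[] } | { ok: false; error: string };

// Assumed cap so that even a very broad term cannot build an unbounded result list.
const MAX_RESULTS = 50;

function quickOrderSearch(term: string | null | undefined, catalog: string[]): SearchResult {
  const query = (term ?? "").trim().toLowerCase();
  if (query === "") {
    // Fail fast instead of searching with nothing to search for.
    return { ok: false, error: "No product was selected." };
  }
  const matches: string[] = [];
  for (const item of catalog) {
    if (item.toLowerCase().includes(query)) {
      matches.push(item);
      if (matches.length >= MAX_RESULTS) break;
    }
  }
  return { ok: true, matches };
}

// "Try to break it" cases: what the server receives when a user skips a step.
const breakingInputs: Array<string | null | undefined> = [undefined, null, "", "   "];
for (const input of breakingInputs) {
  const outcome = quickOrderSearch(input, ["Widget A", "Widget B"]);
  console.log(`${String(input)} -> ${outcome.ok ? "searched" : outcome.error}`);
}
```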